Everyone is obsessing over the engine.

We look at the benchmarks for the latest State-of-the-Art (SOTA) models. We marvel at their reasoning capabilities, their massive context windows, and their ability to churn out complex features in seconds.

But there is a massive disconnect in how we are preparing for them.

Most teams are trying to "adopt AI" by buying tools. They are dropping these Ferrari-grade engines into codebases that are essentially dirt roads.

If you want to actually use the power of modern Autonomous Agents, you don't need more prompt engineering. You need higher test coverage.

The Velocity Problem

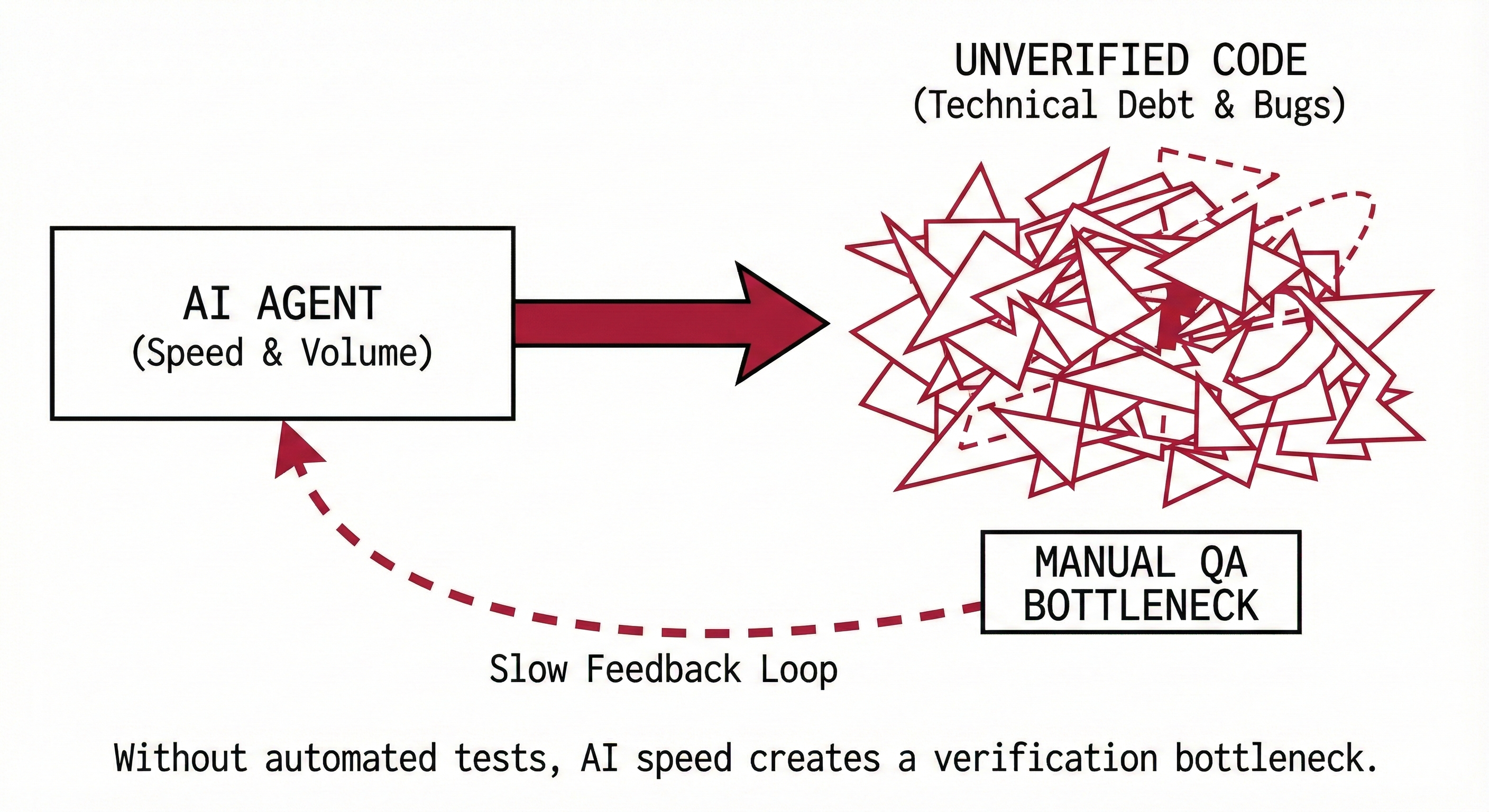

In the pre-AI era, the bottleneck was writing code. In the AI Agent era, the bottleneck is verifying code.

An autonomous agent can generate a week's worth of boilerplate, refactoring, and feature work in an hour. But an agent doesn't "know" if the code works. It relies on signals.

If your codebase relies on manual QA, human code review, or "clicking around to see if it breaks," you have already lost. You cannot manually review code as fast as a modern LLM can write it. You will create a bottleneck so severe that you'll be forced to ask your devs to stop using the tools.

Tests Are the "Tracks"

Think of a comprehensive CI/CD pipeline and a rigid test suite as the railway tracks.

When you have solid tracks:

Agents become autonomous: The agent writes code, runs the test suite, fails, reads the error, corrects itself, and passes. It creates a closed feedback loop.

Speed becomes safe: You can let the AI refactor entire legacy modules because if it breaks a business rule, the system catches it immediately.

Validation scales: The system validates the output, not a burnt-out senior engineer.

Without the tracks? That high-speed bullet train just derails into a field of technical debt.

"AI Readiness" Is Just Good Engineering

We tend to overcomplicate "AI Readiness." We think it means vector databases, RAG pipelines, and fine-tuning.

But the most impactful thing you can do today to prepare for the agents of the future is fix your unit tests.

An AI Agent acts like a junior developer with infinite stamina but zero context.

- If you give it a codebase with 90% coverage, it operates like a Senior Engineer.

- If you give it a codebase with 0% coverage, it operates like a chaos monkey.

The Bottom Line

Stop buying AI tools if you aren't willing to invest in the infrastructure they run on.

The models are ready. The engines are powerful. But unless you lay the tracks down now, you aren't going anywhere fast.

Fix your tests. Then let the agents run.